- Instagram is fertile ground for extremist propaganda, experts told Insider.

- Neo-Nazi groups are using the social media platform to recruit teenagers, the experts said.

- Parents are now being advised to spot far-right messaging to protect their kids.

"My kids have had a pretty progressive upbringing," Joanna Schroeder told Insider. "So it was pretty shocking to me when I started looking over their shoulders to see that there was some really disturbing content showing up on their Instagram feeds."

Schroeder, a parenting writer from Los Angeles, was troubled by the photos and videos being recommended to her two teenage sons on Instagram. "There was alt-right and borderline Holocaust-denial stuff, memes, showing up on there," Schroeder said.

While Schroeder was shocked and appalled, the abundance of far-right content on the photo and video sharing platform is well-known to researchers.

Instagram has allowed itself to emerge as fertile ground for extremist propaganda, experts on extremism told Insider.

"Instagram is actively pulling its predominantly young users down an extremist rabbit hole," Imran Ahmed, CEO of the Center for Countering Digital Hate, wrote in an email.

In some cases, the rabbit hole leads to kids being introduced to neo-Nazi groups. So much so, in fact, that Instagram has become the primary platform for far-right groups to recruit vulnerable teenagers in recent years, several experts said.

'A premium on recruiting youth is really standard'

Instagram has become the "platform of choice for young Nazis to radicalize teenagers," according to the UK anti-racism charity Hope Not Hate's annual report. Neo-Nazi groups are using it to prey on vulnerable young people and sign them up to their extremist causes, the report said.

In the past year alone, Hope Not Hate found that two violent far-right groups have used Instagram as their primary mode of recruitment. The British Hand and the National Partisan Movement, both UK-based extremist groups, actively recruited teenagers on the app, the study found.

Three teenage boys, all alleged to be members of The British Hand, are now facing trial on terrorism charges.

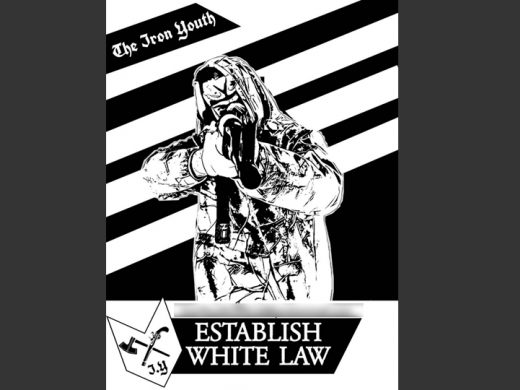

Similarly, in the US, a neo-Nazi group's presence on Instagram led to the arrests of two young men. Both were involved in the hardcore white supremacist group Iron Youth, according to the Anti-Defamation League.

One of the young men had shared Instagram posts urging fellow group members to kill Jewish and Black people, according to a court document.

"The idea that white supremacists and other far-right extremists would put a premium on recruiting youth is really standard," the Anti-Defamation League Center on Extremism's vice president, Oren Segal, told Insider. What is novel, he said, is how neo-Nazi groups are skillfully utilizing Instagram's functions.

"Extremists never miss an opportunity to leverage their hateful ideas through the lens of the latest technology," Segal explained.

Memes are an effective way to cloak more sinister views

The focus on visual media and the abundance of young users make Instagram "a great platform to push far-right propaganda which is stylized and punchy," said Hope Not Hate researcher Patrik Hermansson.

The punchiest way to garner attention from young people is through memes, Hermansson said. "Memes are easily consumable and they are funny and they're easy to share," he added. "They spread quickly and it exposes a lot of people to them."

Memes are also an effective way to cloak more sinister views under a layer of humor or irony, Hermansson said. "The humor makes them easier to swallow," he added.

Some of the more inoffensive-seeming memes use characters popular with the alt-right, such as Doge, Pepe the Frog, and Cheems, to articulate controversial sentiments.

"What many extremists have learned is that explicit expressions of hatred may not attract as many people as more subtle references," the ADL's extremism expert said.

"It's a tried and true technique of how to win hearts and minds," Segal added. "You don't hit them over the heads with the hatred. You sort of slow roll that process."

'Instagram's algorithm leads users down rabbit-holes'

The "slow roll" process, which gradually introduces youngsters to more troubling material, is enabled by Instagram's algorithm, say experts. Liking a seemingly innocuous meme can, in turn, present the teenager with more radical content.

Schroeder saw this process unfold when she began to monitor her teenage sons' Instagram use.

"A kid might like something edgy, Pepe the Frog or something, and that triggers the algorithm," she said. "That then sends them tumbling down into anti-feminist, racist, Holocaust denial, neo-Nazi type of content."

While the Instagram algorithm is relatively opaque and constantly evolving, it is known that the Explore page is a curated page of recommended content. The content is chosen "based on an individual's historical interactions," according to social media marketing company Later.

A March study by the Center for Countering Digital Hate found that users are directed to far-right content on the Explore page.

Liking content relating to any form of misinformation - election, vaccination, or race-based - leads to anti-Semitic and extremist content being promoted to the user, it found. "Instagram's algorithm leads users down rabbit-holes to a warren of extremist content," the study said.

"Instagram's algorithm actively seeks out individuals who have not yet engaged with the extreme or radical accounts, but who have characteristics that the algorithm determines may find it appealing and then serves it to them," Angelo Carusone, president of the progressive media watchdog Media Matters, told Insider.

He believes that directing younger users to problematic accounts makes Instagram a recruiting sergeant for extremists.

"Instagram isn't merely hosting the content; it is actively building extremist movements by recruiting new adherents into the fold and connecting them with like-minded extremists," Carusone said.

Direct messaging can lead to 'grooming'

The DM feature allows users to send a message to any other user of the app and makes it easy to send messages en masse, which aids the indoctrination process, said Hope Not Hate's Hermansson.

"They [extremists] can directly get in touch with people and say, 'Why don't you join my group?'"

This can lead to "grooming," according to far-right researcher Miro Dittrich. "You see 30-somethings talking to 14-year-olds and kind of grooming them for the far-right ideology."

It's particularly hard for social media platforms to police private messages unless a user reports them, Dittrich noted.

While direct messages are hard to moderate, experts believe that moderation on the app is generally insufficient.

"There's the question of how long viral content can stay up on the platform and, therefore, be exposed to a lot of people," Hermansson said. "On Instagram, it appears that it's too long. We see recruitment accounts for fascist groups that stay online for two months."

Instagram faces an even bigger challenge in spotting and removing harmful content published on Instagram Stories. The feature allows users to post photos and videos that disappear from their profile after 24 hours.

"I think they definitely have a problem with Instagram Stories," Dittrich said. "A lot more of the content that violates the terms of service is shared via Stories. I think that's a really hard space to moderate."

When accounts are locked, or private, their content reaches fewer people but is also less likely to be reported. "Accounts among the neo-Nazi radical front usually have a locked account, so it's not easy for people to flag stuff. Only the inner circle is allowed in these spaces," Dittrich added.

Parents have to warn their kids

Every expert Insider spoke to agreed that Instagram needs to speed up its moderation process and that removing the odd post isn't enough.

"You can't just take down one player or delete one picture someone posted," Dittrich said. "You have to analyze and see that this is a network that all post content that leads to offline violence and do a systematic takedown."

Insider asked Instagram about its policy on extremist content but it did not respond to the request for comment.

The responsibility isn't entirely on Instagram, Dittrich told Insider. It also falls upon parents to be aware of the sort of content that their kids are consuming.

Hermansson agrees. "I think the solution to these issues comes down to the parents and schools because they are the closest to the kids," he said. "The more you know about the terminology and language of the far-right today, the easier it is to see the signs."

Schroeder, who has taken it upon herself to learn how to protect her kids, said: "It's like teaching your kids to swim or teaching them to dial 911. They have to learn critical media skills, and they have to learn how to sniff out propaganda."